Synthesizing Network Accelerators using Programmable Switching Equipment

Designing networked systems that take best advantage of heterogeneous dataplanes (e.g., dividing packet processing across both a PISA switch and an x86 CPU) can improve performance, efficiency, and resource consumption. However, programming for multiple hardware targets remains challenging because developers must learn platform-specific languages and skills. While some ‘write-once, run-anywhere’ compilers exist, they cannot consider a range of implementation options to tune a network function (NF) to meet performance objectives.

We explore preliminary ideas toward a compiler that searches a large space of different mappings of functionality to hardware. This search can be tuned for a programmer-specified objective, such as minimizing memory consumption or maximizing network throughput. Our initial prototype, SyNAPSE, is based on a methodology called component-based synthesis and supports deployments across x86 and Tofino platforms. Relative to a baseline compiler that generates only one deployment decision, SyNAPSE uncovers thousands of deployment options, including one deployment that reduces controller traffic by an order of magnitude and another that halves memory usage.
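To make the idea of an objective-driven search space concrete, the toy Python sketch below enumerates every mapping of three hypothetical NAT components onto either the Tofino or the x86 CPU, discards plans that exceed a switch memory budget, and picks one plan per objective. The component names, their costs, and the SRAM budget are invented for illustration, and controller load is used as an inverse proxy for throughput. Brute-force enumeration is only a stand-in here: SyNAPSE itself explores the space using component-based synthesis, not this loop.

```python
from itertools import product

# Hypothetical components and costs:
# (SRAM units consumed if placed on Tofino, controller load if placed on x86).
COMPONENTS = {
    "flow_lookup": (4, 10),
    "port_allocation": (2, 3),
    "header_rewrite": (1, 8),
}
SRAM_BUDGET = 6  # hypothetical switch memory available to this NF


def enumerate_plans():
    """Yield every feasible mapping of components to targets, with its costs."""
    names = list(COMPONENTS)
    for targets in product(("tofino", "x86"), repeat=len(names)):
        plan = dict(zip(names, targets))
        sram = sum(COMPONENTS[n][0] for n in names if plan[n] == "tofino")
        load = sum(COMPONENTS[n][1] for n in names if plan[n] == "x86")
        if sram <= SRAM_BUDGET:  # drop plans that do not fit on the switch
            yield plan, sram, load


# Each objective ranks plans by its primary metric; the other metric breaks ties.
OBJECTIVES = {
    "min_memory": lambda p: (p[1], p[2]),
    "max_throughput": lambda p: (p[2], p[1]),
}


def best_plan(objective):
    return min(enumerate_plans(), key=OBJECTIVES[objective])


if __name__ == "__main__":
    for obj in OBJECTIVES:
        plan, sram, load = best_plan(obj)
        print(obj, plan, f"sram={sram}", f"controller_load={load}")
```

With these made-up numbers, the memory objective keeps everything on the CPU, while the throughput objective moves the two most CPU-expensive components onto the switch. Different objectives select different points in the same space, which is the behavior the hybrid NATs on this page illustrate.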

We explored a variety of hybrid NAT solutions from within the SyNAPSE search space. These hybrid NATs partition functionality between the Tofino switch data-plane and the switch CPU, which acts as the controller, and each one optimizes for a different goal.

What is interesting about these solutions, and about SyNAPSE's ability to reason about them, is that there is no silver bullet: no single solution is best in all regards. Different solutions exhibit different trade-offs, and operators may favor one over another depending on which performance metrics they care about and what their goals for the particular NF are. The SyNAPSE architecture allows developers to reason about these trade-offs and, with the right search heuristic, explore solutions that fit their needs.

no-offload

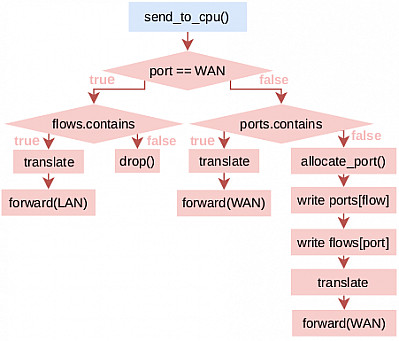

no-offload Execution Plan

This variant keeps the entire NAT logic on the CPU: every packet that flows through the programmable switch is redirected to the controller. This may seem like a silly solution, but it is in fact the one that minimizes the amount of switch resources dedicated to the NF, which allows for better co-existence with other NFs on the same switch.

Source Code: P4
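As a point of reference for how the other variants split the work, here is a minimal Python sketch of what keeping "the entire NAT logic on the CPU" could look like. It is a toy, not the SyNAPSE-generated code: `CpuNat` and `switch_pipeline` are hypothetical names, the mapping is keyed only on the private IP and port, and port exhaustion and flow timeouts are ignored.

```python
class CpuNat:
    """Toy endpoint-independent NAT whose state lives entirely on the CPU."""

    def __init__(self, public_ip: str, first_port: int = 1024):
        self.public_ip = public_ip
        self.next_port = first_port
        self.outbound_map = {}  # (private_ip, private_port) -> public_port
        self.inbound_map = {}   # public_port -> (private_ip, private_port)

    def translate_outbound(self, src_ip: str, src_port: int):
        key = (src_ip, src_port)
        if key not in self.outbound_map:
            # New internal endpoint: allocate the next public port.
            port = self.next_port
            self.next_port += 1
            self.outbound_map[key] = port
            self.inbound_map[port] = key
        return self.public_ip, self.outbound_map[key]

    def translate_inbound(self, dst_port: int):
        # Unknown public ports have no mapping; None means "drop".
        return self.inbound_map.get(dst_port)


def switch_pipeline(packet) -> str:
    """no-offload: the switch keeps no NAT state and punts every packet."""
    return "to_controller"  # the packet contents are never inspected


nat = CpuNat("203.0.113.1")
print(switch_pipeline({"src": "10.0.0.1"}))      # to_controller
print(nat.translate_outbound("10.0.0.1", 5000))  # ('203.0.113.1', 1024)
print(nat.translate_inbound(1024))               # ('10.0.0.1', 5000)
print(nat.translate_inbound(2000))               # None
```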

min-resources

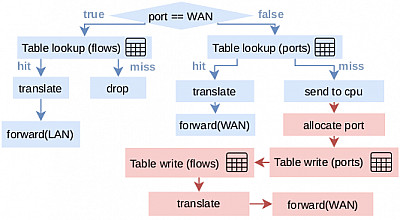

min-resources Execution Plan

Like no-offload, this solution tries to minimize the switch resources allocated to the NAT, but it focuses specifically on tables (SRAM). This still allows part of the NAT functionality to be offloaded to the switch: translation information is stored in the switch's tables, so packets can be translated almost entirely in the data-plane.

As tables cannot be modified by the dataplane, the first packet of every flow must be sent to the controller. Upon receiving a packet from a new flow, the controller updates the switch's tables with new translation information, allowing subsequent packets for the flow to be translated in hardware.
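The min-resources control loop can be sketched in Python as follows. This is a hypothetical model rather than the generated P4 and controller code: a dict stands in for the switch's match-action table, `process` plays the data-plane role, and `controller_handle` plays the controller's. The public IP and starting port are arbitrary.

```python
class MinResourcesNat:
    """Toy model of min-resources: translations live only in switch tables."""

    def __init__(self, public_ip: str, first_port: int = 1024):
        self.public_ip = public_ip
        self.next_port = first_port
        self.table = {}         # flow key -> (public_ip, port); written only by the controller
        self.to_controller = 0  # packets punted to the CPU

    def controller_handle(self, key):
        # Controller allocates a translation and installs it in the switch table.
        mapping = (self.public_ip, self.next_port)
        self.next_port += 1
        self.table[key] = mapping
        return mapping

    def process(self, key):
        # Data-plane lookup: a hit is translated in hardware,
        # a miss (the first packet of a flow) is sent to the controller.
        if key in self.table:
            return self.table[key], "dataplane"
        self.to_controller += 1
        return self.controller_handle(key), "controller"


nat = MinResourcesNat("203.0.113.1")
flow = ("10.0.0.1", 5000, "198.51.100.7", 80, "tcp")
print(nat.process(flow))  # (('203.0.113.1', 1024), 'controller')
print(nat.process(flow))  # (('203.0.113.1', 1024), 'dataplane')
```

The sketch ignores the window between punting a packet and the table entry being installed; in a real switch, further packets of the same flow arriving in that window would also miss.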

min-cpu-load

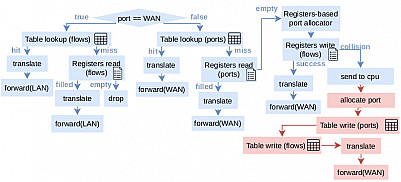

min-cpu-load Execution Plan

min-resources still relies heavily on the controller, as the first packet of every flow must be sent to the CPU in order to update the switch tables. This solution aims to make the switch data-plane largely independent, using not only tables but also registers to store translation information, thereby minimizing CPU intervention. Registers can be updated by the data-plane, so new flows need not go to the controller as long as they fit in register space.

Register space is, however, a limited resource. When registers can no longer hold more flows (detected as hash collisions), this solution falls back to tables, sending packets to the controller.
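A toy Python model of this register-first, table-fallback behavior follows. It is a sketch under stated assumptions, not the generated P4: CRC32 over the flow key stands in for the switch's hash unit, each register slot maps to a fixed public port, and `REG_PORT_BASE` and `TABLE_PORT_BASE` are hypothetical constants. The usage lines use a single register slot to force a collision.

```python
import zlib

REG_PORT_BASE = 10000    # hypothetical: ports tied to register slots (data-plane owned)
TABLE_PORT_BASE = 40000  # hypothetical: ports allocated by the controller


class MinCpuLoadNat:
    """Toy model of min-cpu-load: registers first, tables as a fallback."""

    def __init__(self, num_registers: int):
        self.registers = [None] * num_registers  # slot -> flow key; writable by the data-plane
        self.table = {}                          # flow key -> port; written only by the controller
        self.next_table_port = TABLE_PORT_BASE
        self.to_controller = 0                   # packets punted to the CPU

    def _slot(self, key) -> int:
        # Stand-in for the switch's hash unit: CRC32 of the key's repr.
        return zlib.crc32(repr(key).encode()) % len(self.registers)

    def process(self, key):
        slot = self._slot(key)
        owner = self.registers[slot]
        if owner is None:
            # Free slot: the data-plane claims it without involving the CPU.
            self.registers[slot] = key
            return REG_PORT_BASE + slot, "dataplane"
        if owner == key:
            return REG_PORT_BASE + slot, "dataplane"
        # Hash collision: fall back to the controller-managed table.
        if key in self.table:
            return self.table[key], "dataplane"
        self.to_controller += 1
        self.table[key] = self.next_table_port
        self.next_table_port += 1
        return self.table[key], "controller"


nat = MinCpuLoadNat(num_registers=1)  # one slot forces a collision
a = ("10.0.0.1", 5000, "198.51.100.7", 80, "tcp")
b = ("10.0.0.2", 6000, "198.51.100.7", 80, "tcp")
print(nat.process(a))  # (10000, 'dataplane')
print(nat.process(b))  # (40000, 'controller')
print(nat.process(b))  # (40000, 'dataplane')
```

Note that register slots are never freed in this variant, which is why sustained churn eventually pushes most new flows onto the table path.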

max-throughput

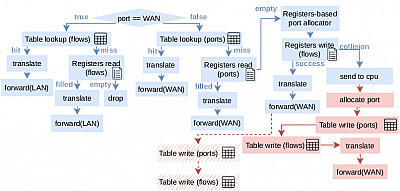

max-throughput Execution Plan

This solution aims to maximize network throughput and, unsurprisingly, is the most performant. As with min-cpu-load, it uses both tables and registers to store translation information, and allocates translations to the registers while they still fit (i.e., until they collide with other flows). However, whenever the data-plane allocates a new flow in the registers, it sends a notification to the controller, which reacts by moving that information from the registers to the tables, freeing up register space for new flows. This happens asynchronously, so traffic meanwhile proceeds entirely in the data-plane.

Unlike in min-resources, most traffic need not be processed by the controller, as the data-plane mostly just notifies the CPU asynchronously rather than forwarding entire packets. However, this can increase CPU load, as every new flow leads to a notification.
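The asynchronous register-to-table migration can be modeled in Python as below. This is a hypothetical sketch, not the generated code: a `deque` stands in for the notification channel from the switch to the controller, `controller_drain` is an invented name for the controller's reaction, and the port ranges are arbitrary. Ports are handed out by a data-plane counter so that a flow keeps its port when it migrates to the table; counter wrap-around and table capacity are ignored.

```python
import zlib
from collections import deque

DP_PORT_BASE = 10000  # hypothetical: ports the data-plane hands out itself
CP_PORT_BASE = 40000  # hypothetical: ports the controller hands out


class MaxThroughputNat:
    """Toy model of max-throughput: registers plus async migration to tables."""

    def __init__(self, num_registers: int):
        self.registers = [None] * num_registers  # slot -> (flow key, port)
        self.table = {}                          # flow key -> port (controller-installed)
        self.notifications = deque()             # async notifications: (slot, key, port)
        self.next_dp_port = DP_PORT_BASE
        self.next_cp_port = CP_PORT_BASE
        self.to_controller = 0                   # full packets punted to the CPU

    def _slot(self, key) -> int:
        # Stand-in for the switch's hash unit: CRC32 of the key's repr.
        return zlib.crc32(repr(key).encode()) % len(self.registers)

    def process(self, key):
        if key in self.table:                      # flow already migrated
            return self.table[key], "dataplane"
        slot = self._slot(key)
        entry = self.registers[slot]
        if entry is not None and entry[0] == key:  # register hit
            return entry[1], "dataplane"
        if entry is None:                          # free slot: allocate in the data-plane
            port = self.next_dp_port
            self.next_dp_port += 1
            self.registers[slot] = (key, port)
            self.notifications.append((slot, key, port))  # notify; the packet is not forwarded
            return port, "dataplane"
        # Collision before the controller freed the slot: punt the packet.
        self.to_controller += 1
        port = self.next_cp_port
        self.next_cp_port += 1
        self.table[key] = port
        return port, "controller"

    def controller_drain(self):
        """Controller side: move notified flows from registers into the table."""
        while self.notifications:
            slot, key, port = self.notifications.popleft()
            self.table[key] = port
            if self.registers[slot] == (key, port):
                self.registers[slot] = None  # free the slot for new flows


def flow(i):
    return (f"10.0.0.{i}", 5000, "198.51.100.7", 80, "tcp")


nat = MaxThroughputNat(num_registers=1)
print(nat.process(flow(1)))  # (10000, 'dataplane'), notification queued
print(nat.process(flow(2)))  # (40000, 'controller'), slot still held by flow 1
nat.controller_drain()       # flow 1 moves to the table, slot freed
print(nat.process(flow(3)))  # (10001, 'dataplane')
print(nat.process(flow(1)))  # (10000, 'dataplane'), now served from the table
```

The trade-off described above shows up directly: every new register flow costs one queued notification (CPU work), but only collisions that occur before the controller catches up cost a punted packet.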

We performed a series of simulations to evaluate each of the solutions described above. The simulations report both the amount of traffic sent to the controller and the load induced on the CPU, for a given workload. We consider both empirical traffic traces with real Internet traffic, from Benson et al., and synthetic traces that induce varying amounts of churn.

Throughput

First, we look at the amount of traffic forwarded to the controller, a typical proxy for throughput in programmable switches: the data-plane operates at line rate but the control-plane is much slower, so more traffic going to the controller means worse performance.

Fraction of traffic sent to controller under empirical traffic.

Fraction of traffic sent to controller under synthetic traffic with variable churn.

Typical Internet traffic follows Zipfian power-law dynamics, so churn is comparatively low. Under these circumstances, no-offload sends all traffic to the controller, as expected, while min-cpu-load and max-throughput send three orders of magnitude less traffic to the controller. min-resources uses roughly half the switch SRAM, though.

As churn increases (measured in flows per minute, fpm), so does the fraction of packets sent to the controller. This holds for all configurations, but max-throughput is, by design, the least sensitive to churn.
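A back-of-the-envelope calculation shows why churn weighs so heavily on designs that punt the first packet of every flow. The Python sketch below converts fpm into new flows per second and, for min-resources only, estimates the controller-bound packet fraction under a hypothetical offered load of 10 Mpps. These are illustrative numbers under that assumption, not the measurements from our simulations.

```python
SECONDS_PER_MINUTE = 60


def new_flows_per_second(fpm: float) -> float:
    """Convert churn in flows per minute (fpm) into new flows per second."""
    return fpm / SECONDS_PER_MINUTE


def min_resources_fraction(fpm: float, packets_per_second: float) -> float:
    """Estimated fraction of packets punted by min-resources.

    Assumes exactly one punted packet (the first) per new flow, and ignores
    packets that arrive before the controller installs the table entry.
    """
    return min(1.0, new_flows_per_second(fpm) / packets_per_second)


if __name__ == "__main__":
    PPS = 10_000_000  # hypothetical offered load: 10 Mpps
    for fpm in (1_000_000, 10_000_000, 100_000_000):
        print(f"{fpm:,} fpm -> {new_flows_per_second(fpm):,.0f} new flows/s, "
              f"min-resources fraction ~ {min_resources_fraction(fpm, PPS):.4f}")
```

At 1, 10, and 100 Mfpm the switch sees roughly 16.7 thousand, 167 thousand, and 1.67 million new flows per second, so under this simple model the punted fraction grows linearly with churn until the controller saturates.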

CPU Load

We also looked at relative CPU load on the controller for each of the simulated hybrid NAT solutions, using the same workloads.

Relative CPU load under empirical traffic.

Relative CPU load under synthetic traffic with variable churn.

By design, min-cpu-load induces about three orders of magnitude less CPU load than the other solutions under empirical, low-churn Internet traffic. It readily beats the other solutions up to about 10 Mfpm, but ends up performing worse than max-throughput in the extreme scenario of 100 million flows per minute. At that point, churn is so high that register space fills very quickly and min-cpu-load falls back to tables for most flows, behaving more like min-resources.

You can find more data and insights in our paper.

Francisco Pereira, Gonçalo Matos, Hugo Sadok, Daehyeok Kim, Ruben Martins, Justine Sherry, Fernando M. V. Ramos, and Luis Pedrosa. 2022. Automatic generation of network function accelerators using component-based synthesis. In Proceedings of the Symposium on SDN Research (SOSR '22). Association for Computing Machinery, New York, NY, USA, 89–97. https://doi.org/10.1145/3563647.3563656

@inproceedings{pereira2022synapse,
  author = {Pereira, Francisco and Matos, Gon\c{c}alo and Sadok, Hugo and Kim, Daehyeok and Martins, Ruben and Sherry, Justine and Ramos, Fernando M. V. and Pedrosa, Luis},
  title = {Automatic Generation of Network Function Accelerators Using Component-Based Synthesis},
  year = {2022},
  isbn = {9781450398923},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3563647.3563656},
  doi = {10.1145/3563647.3563656},
  booktitle = {Proceedings of the Symposium on SDN Research},
  pages = {89--97},
  numpages = {9},
  keywords = {programming abstraction, network function virtualization, in-network compute},
  location = {Virtual Event},
  series = {SOSR '22}
}

All code used in the paper is available on GitHub. This includes:

- The four hybrid NAT variants we explored (P4 and simulators).

- Code to generate the synthetic variable churn workloads.

- Code to generate the plots in the paper.

We tested our code on a fresh Ubuntu 22.04 installation. Dependencies include:

- g++, v9 or higher

- wget (to download the empirical workload)

- gnuplot (to generate plots)

- libpcap (to generate workloads)

The PCAP files we use during our evaluation are general-purpose datasets and can be used to evaluate NFs in other contexts, outside of SyNAPSE. You can generate these files using the simulator's run script, or download them below (XZ compressed):

- univ2_pt1.pcap.xz - Empirical workload, directly extracted from Benson et al.

- churn_1000000_fpm.pcap.xz - Churn experiment with 1Mfpm.

- churn_10000000_fpm.pcap.xz - Churn experiment with 10Mfpm.

- churn_100000000_fpm.pcap.xz - Churn experiment with 100Mfpm.

To facilitate reproducibility, we have scripted the process of running the simulations, processing the data, and generating the plots from the paper. To reproduce all of our results, get the code and install the dependencies, then run run.sh from the simulator directory. The plots will be generated in the same directory.

The SyNAPSE analysis builds on top of the Vigor framework, using the KLEE symbolic execution engine and the Z3 theorem prover. The Vigor NFs we analyze build on DPDK. The NFs we generate for the Tofino compile for and use APIs from the Intel® Tofino™ Native Architecture.

Does your project use SyNAPSE? Let us know and we can link to it here!

Gonçalo Matos

Francisco Machado

João Tiago

This work was supported by the SyNAPSE CMU-Portugal/FCT project (CMU/TIC/0083/2019, DOI:10.54499/CMU/TIC/0083/2019), the uPVN FCT project (PTDC/CCI-INF/30340/2017), INESC-ID (via UIDB/50021/2020, DOI:10.54499/UIDB/50021/2020), and the Intel/VMware 3D FPGA Academic Research Center. F. Pereira is supported by the FCT scholarship PRT/BD/152195/2021.

© 2022, SyNAPSE Authors

Logo by imaginationlol - Flaticon